Cost Optimisation on AWS Without Using Tooling

Posted by Chris McKinnel - 2 December 2021 - 5 minute read

This week I needed to do a rapid analysis of a new customer's AWS account to see if there were any ways to save on their AWS bill. They had indicated they were keen to start using some of the newer AWS services to modernise their operations, but before they could do that they needed to free up some cash.

It needed to be rapid because the request got lost in the ether and they had been waiting on it for a while. Usually I would engage our Cloud Managed Service team and they would look to onboard the customer into the service and hook it up to some tooling like CloudCheckr or CloudHealth, but in this case I didn't have time to wait for sign-off and onboarding.

It was time to kick it old-school and do a manual analysis of this environment.

Tooling is better

Before we continue, let me just clarify that using some kind of tooling to inspect your AWS environment is much better than doing it manually. I'm sure I missed a bunch of stuff in this analysis, both because I didn't have time to dig super deep and because AWS is a complex beast with a long list of places to look for savings.

There are a heap of tools out there that you can use, and many people have done a much better job than I ever will of collating them. If you're interested, just ask Google.

Let's get started

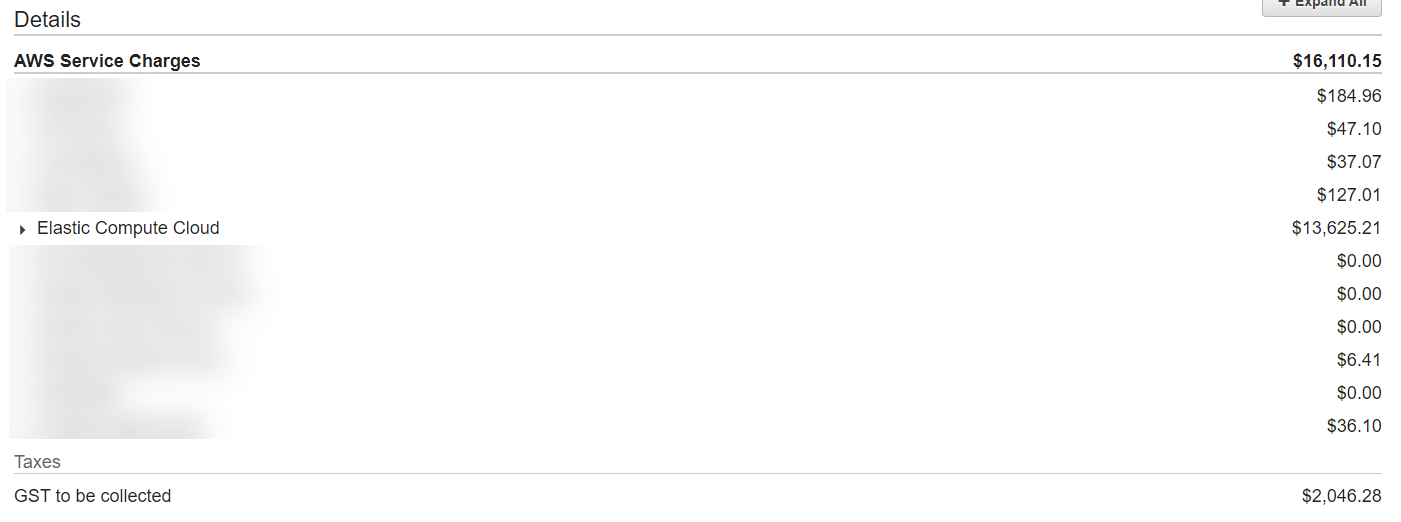

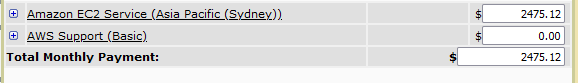

The first step in a manual analysis of an AWS account is to check the billing console to see where all the money is being spent. For this customer the spend was mostly in EC2.

By drilling into it, you can see where most of the money is going.

There are a few red flags already:

- There are old instance family types

- The snapshot storage cost is 3x higher than the EBS storage

- There are no Reserved Instances or Savings Plans

- Most of the spend is from EC2 instances (OK, not a red flag, but good to know)

So, the big ticket items here are probably going to be deleting some old snapshots and right-sizing some EC2 instances.
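If you'd rather pull the same per-service breakdown from a script than click through the billing console, here is a minimal sketch using the Cost Explorer API via boto3. It assumes Cost Explorer is enabled on the account and that your credentials are allowed to call `ce:GetCostAndUsage`. The helper names `spend_by_service` and `fetch_spend` are made up for this example.

```python
from datetime import date


def spend_by_service(response):
    """Flatten a get_cost_and_usage response into (service, amount) pairs, biggest first."""
    totals = {}
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def fetch_spend(start: date, end: date):
    """Ask Cost Explorer for monthly unblended cost grouped by service."""
    # Imported here so spend_by_service can be tried without boto3 or AWS credentials
    import boto3

    ce = boto3.client("ce")
    response = ce.get_cost_and_usage(
        TimePeriod={"Start": start.isoformat(), "End": end.isoformat()},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
    # Assumption: the result fits in one page; a large account may need NextPageToken handling
    return spend_by_service(response)
```

Calling `fetch_spend(date(2021, 11, 1), date(2021, 12, 1))` would return something like a list of `(service, amount)` tuples for November. Swapping the `GroupBy` key to `USAGE_TYPE` should give a view closer to the drill-down in the console.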

Snapshot storage

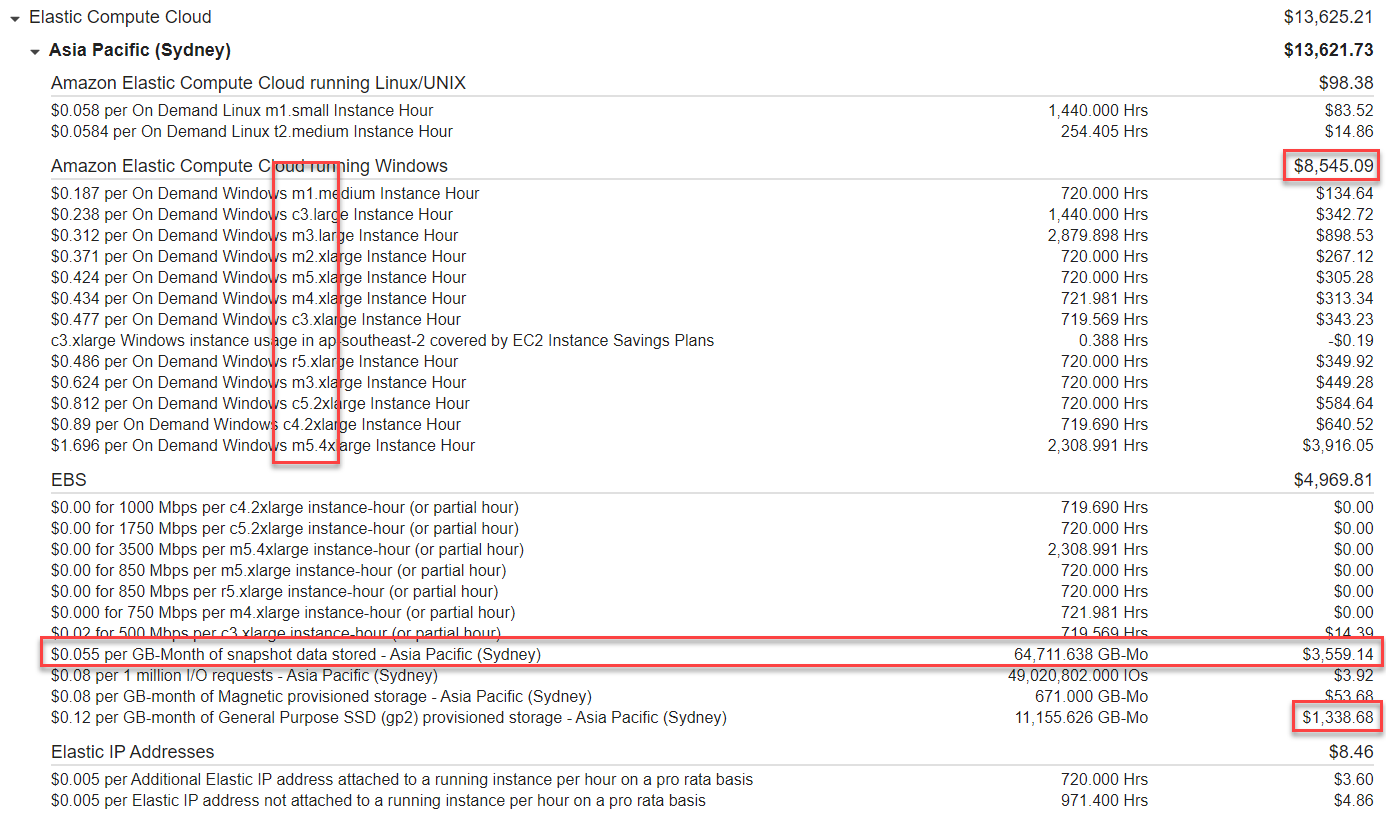

Let's compare the amount of snapshot storage we're paying for (about 65TB according to that screenshot) with how much EBS volume storage we're paying for (about 11TB). Something seems a bit off - there's a decent chance that there are some old snapshots that just aren't needed anymore.

Heading over to the EC2 console and drilling into the EBS snapshots shows us there are over 1800 snapshots, starting from 2013.

Let's assume that with the 11TB of EBS volumes we've got at the moment that there shouldn't be any more than 20TB of snapshot storage to have a full backup of everything and incremental backups for the past year or so. There might be some requirements to keep backups for longer, but you generally don't keep all of them - you'd normally just keep a handful.
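Scrolling through 1800 snapshots in the console gets old fast, so here is a rough sketch of how you might list the ones older than a year with boto3 instead. The one-year cutoff just mirrors the assumption above, and the function names are hypothetical. Note that `VolumeSize` is the size of the source volume in GiB, not the incremental storage you're actually billed for, so treat the total as an indicator rather than a bill.

```python
from datetime import datetime, timedelta, timezone


def old_snapshots(snapshots, now, max_age_days=365):
    """Return the snapshots that were started more than max_age_days before now."""
    cutoff = now - timedelta(days=max_age_days)
    return [snap for snap in snapshots if snap["StartTime"] < cutoff]


def summarise(snapshots):
    """Return (count, total source volume size in GiB) for a list of snapshots."""
    return len(snapshots), sum(snap["VolumeSize"] for snap in snapshots)


def fetch_own_snapshots():
    """Yield every snapshot owned by the current account, page by page."""
    # Imported here so the helpers above can be tried without boto3 or AWS credentials
    import boto3

    paginator = boto3.client("ec2").get_paginator("describe_snapshots")
    # OwnerIds=["self"] skips public and shared snapshots
    for page in paginator.paginate(OwnerIds=["self"]):
        yield from page["Snapshots"]
```

Something like `summarise(old_snapshots(fetch_own_snapshots(), datetime.now(timezone.utc)))` would then give a count and a rough size for the deletion candidates. Check any retention requirements before deleting anything.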

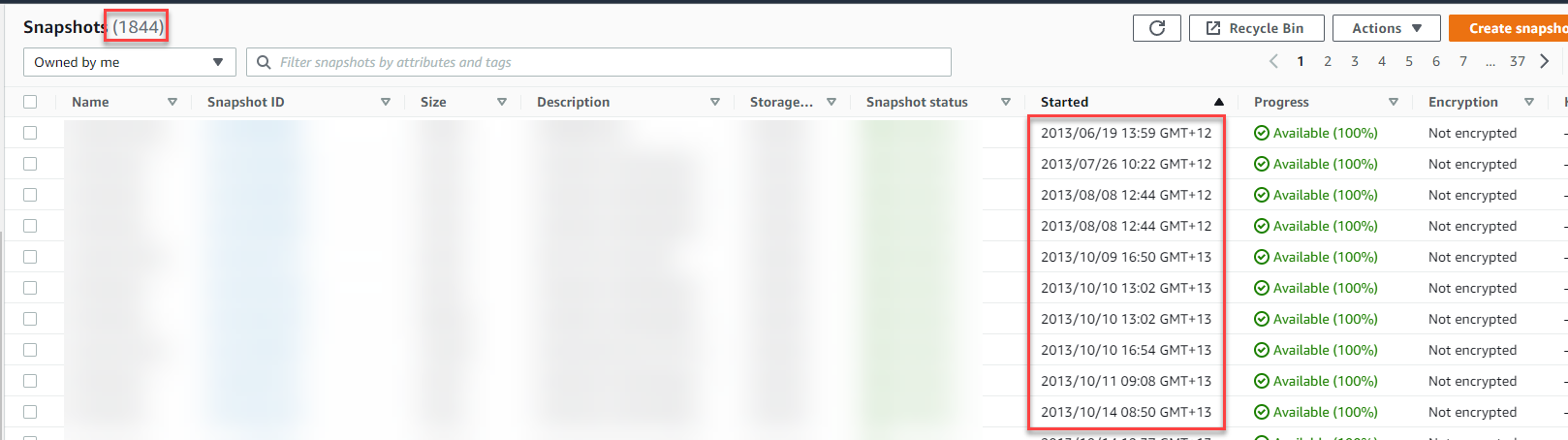

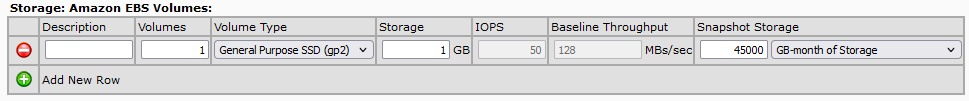

How much will deleting these old snapshots save us? If we assume we'll be deleting about 45TB we can plug this directly into the AWS Simple Monthly Calculator (the old one, I haven't converted to the new one yet).

Ooooff, if we convert that to NZ dollars we get $3,465.17 a month. Which is $41,582.01 a year.

Great start!

Total savings so far: $41,582.01
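If you don't want to use the calculator, the arithmetic behind that number is simple enough to sketch. The price per GB-month and the exchange rate below are placeholders, not the values used in this analysis, so this won't reproduce the figure above exactly. Look up the current snapshot price for your region and today's exchange rate before trusting the result.

```python
def snapshot_saving(tb_deleted, usd_per_gb_month, nzd_per_usd):
    """Return (monthly, yearly) savings in NZD for deleting tb_deleted of snapshots."""
    # Assumption: 1 TB = 1024 GB, which may differ from how your bill counts it
    gb_deleted = tb_deleted * 1024
    monthly_nzd = gb_deleted * usd_per_gb_month * nzd_per_usd
    return round(monthly_nzd, 2), round(monthly_nzd * 12, 2)


# Placeholder inputs: 45 TB deleted, 0.05 USD per GB-month, 1.5 NZD per USD
monthly, yearly = snapshot_saving(45, usd_per_gb_month=0.05, nzd_per_usd=1.5)
print(f"${monthly:,.2f} a month, ${yearly:,.2f} a year")
```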

EC2 Right-Typing / Right-Sizing

The next thing to do is get a list of those EC2 instances, make some recommendations and figure out how much each of the recommendations is going to save the customer.

If there are no tools available to use, write your own! I whipped up a wee Python script that'll export the EC2 instances to a CSV.

Create ec2_export.py

    #!/usr/bin/env python
    import csv

    import boto3


    def main():
        ec2_client = boto3.client('ec2')
        # A single call is enough here because this account has fewer than 50 instances
        response = ec2_client.describe_instances(MaxResults=50)
        reservations = response['Reservations']

        with open('export.csv', 'w', newline='') as csvfile:
            writer = csv.writer(csvfile, delimiter=',')
            header = ['instance-id', 'name', 'type']
            writer.writerow(header)

            for reservation in reservations:
                # A reservation can hold more than one instance, so loop over all of them
                for instance in reservation['Instances']:
                    instance_row = [
                        instance['InstanceId'],
                        # Untagged instances have no 'Tags' key at all
                        get_instance_name(tags=instance.get('Tags', [])),
                        instance['InstanceType'],
                    ]
                    writer.writerow(instance_row)
                    print(instance_row)


    def get_instance_name(tags):
        # Return the value of the 'Name' tag, or an empty string if there isn't one
        for tag in tags:
            if tag['Key'] == 'Name':
                return tag['Value']
        return ''


    if __name__ == '__main__':
        main()

I happened to know there were fewer than 50 instances in this AWS account, but if you're dealing with an account that has more you'll need to add pagination to the script above. I have done a manual analysis for an account that had more than 100 instances in it, but you really want to start looking at some tooling when you get to those numbers.
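For an account with more than 50 instances, the pagination mentioned above could look something like this minimal sketch. It leans on boto3's built-in paginator for `describe_instances` rather than handling `NextToken` by hand. The function names are made up for the example, and the row-building part is split out so it can be tried against fake data.

```python
import csv


def iter_instance_rows(pages):
    """Yield [instance-id, name, type] rows from describe_instances response pages."""
    for page in pages:
        for reservation in page.get("Reservations", []):
            for instance in reservation.get("Instances", []):
                # Untagged instances have no "Tags" key, so default to an empty list
                tags = {tag["Key"]: tag["Value"] for tag in instance.get("Tags", [])}
                yield [
                    instance["InstanceId"],
                    tags.get("Name", ""),
                    instance["InstanceType"],
                ]


def export_all_instances(path="export.csv"):
    """Write every instance in the current region to a CSV, however many pages it takes."""
    # Imported here so iter_instance_rows can be tried without boto3 or AWS credentials
    import boto3

    paginator = boto3.client("ec2").get_paginator("describe_instances")
    with open(path, "w", newline="") as csvfile:
        writer = csv.writer(csvfile)
        writer.writerow(["instance-id", "name", "type"])
        writer.writerows(iter_instance_rows(paginator.paginate()))
```

Calling `export_all_instances()` should produce the same three-column CSV as the single-call script, just without the 50 instance ceiling. It only covers one region at a time, the same as the original.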

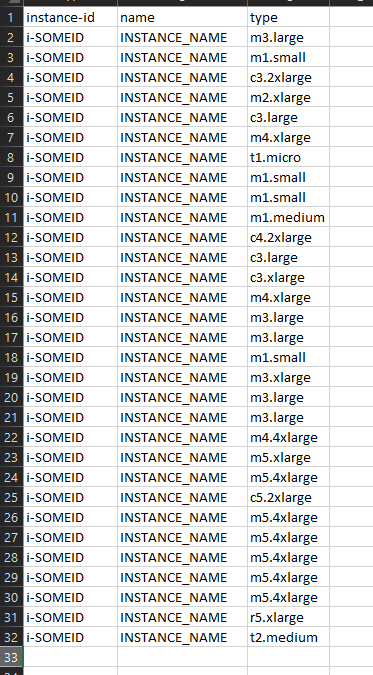

Running the script gives us a really simple export.

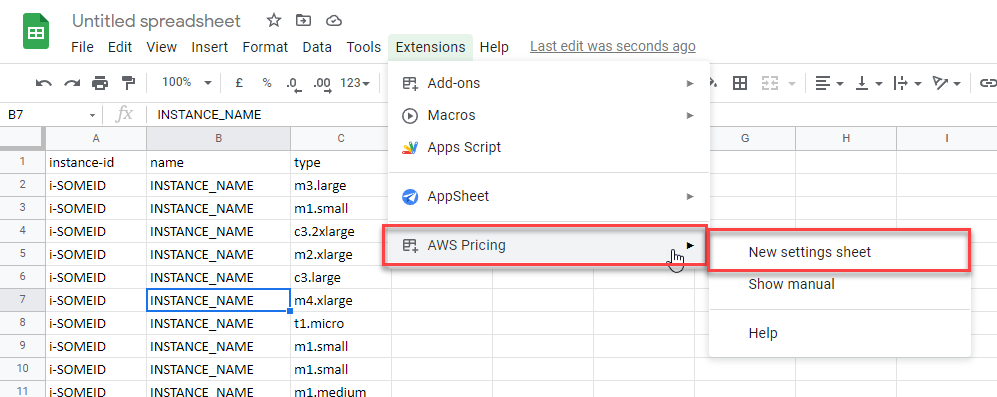

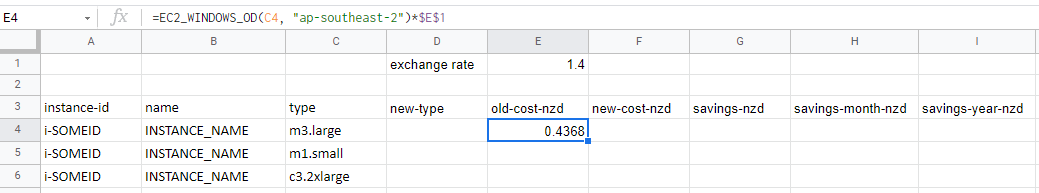

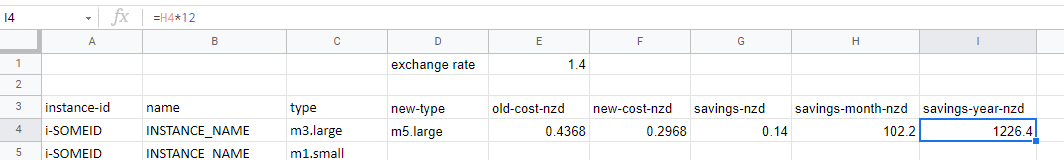

Now we need to figure out how much each of these instances costs. It turns out there is an AWESOME Google Docs plugin called AWS Pricing which allows us to call a function directly from Google Docs to retrieve the pricing info for that instance type.

Back in the day I was using a table scraper on the pricing page to get this info (it must have been before the pricing API?). Those were bleak times.

Install the plugin and copy the data from our CSV into a new Google Doc. You should be able to see the AWS Pricing plugin appear in the Extensions menu.

Click on Help for some instructions on how to use it.

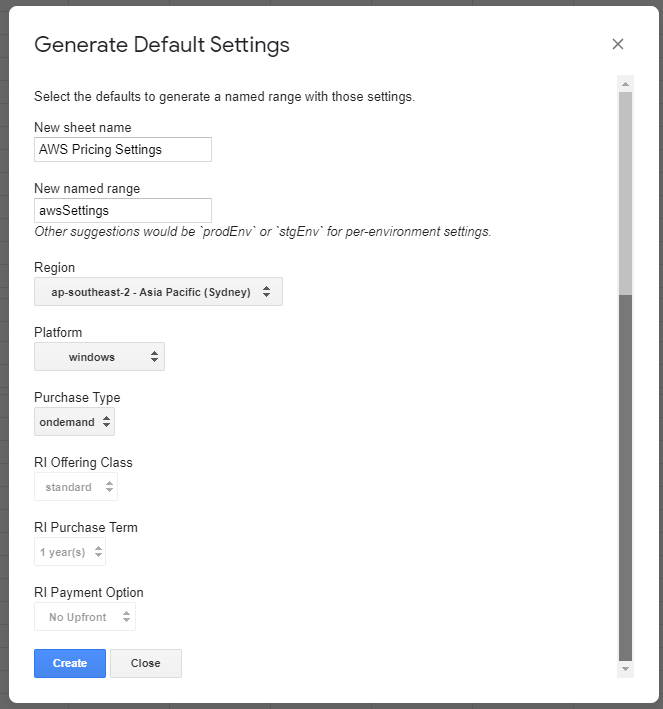

We're going to click on Generate Settings Sheet and tell it which region and instance type to use and then click Create.

Now we're ready to add some column headers and call our new function to get pricing info. Let's add the following headers:

- new-type

- old-cost-nzd

- new-cost-nzd

- savings-nzd

- savings-month-nzd

- savings-year-nzd

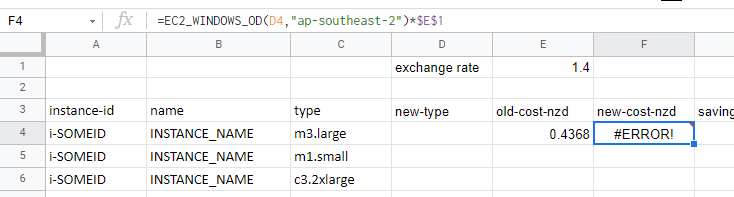

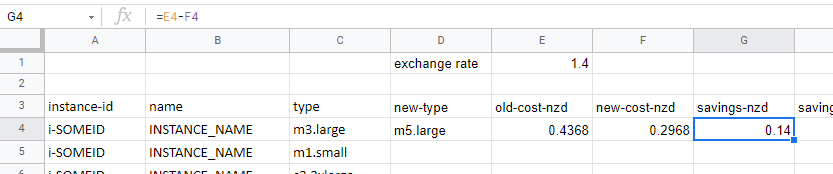

Set up some formulas that call our AWS Pricing function, and work out the values for each of the headers.

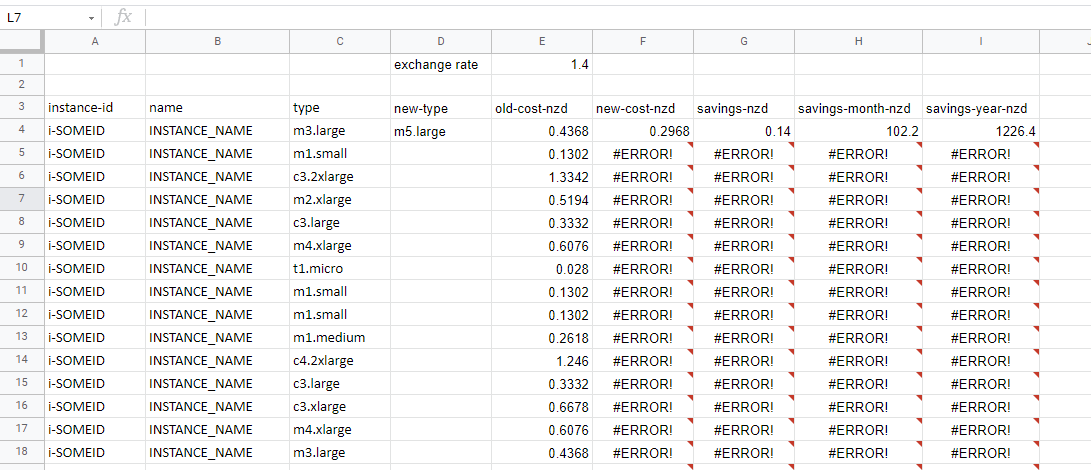

We need to put something in the new-type column so our plugin has something to look up. Otherwise it'll just say "ERROR".

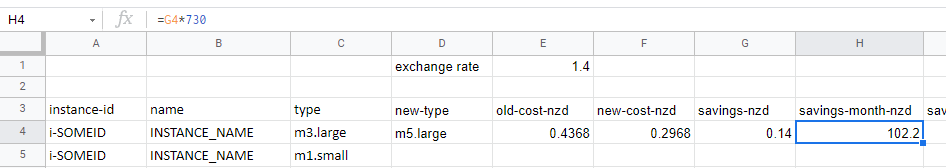

Now we can drag our formulas down so they apply to each of the instances.

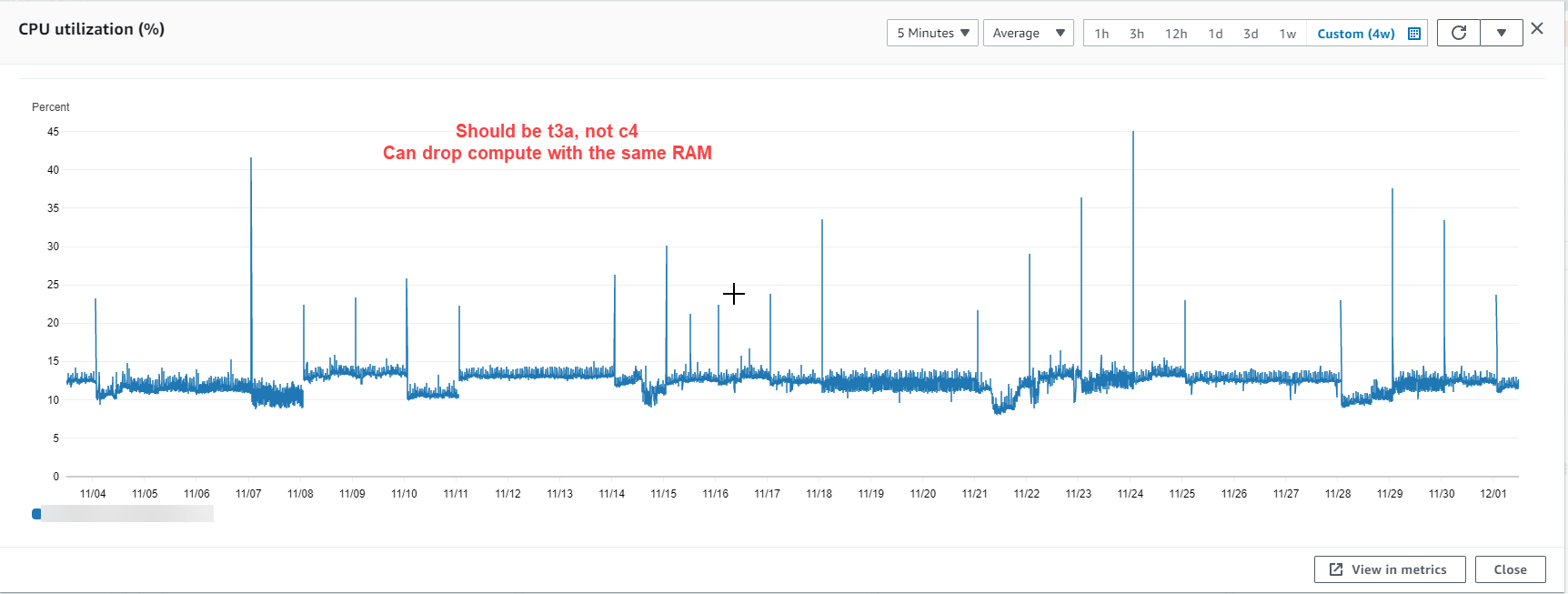

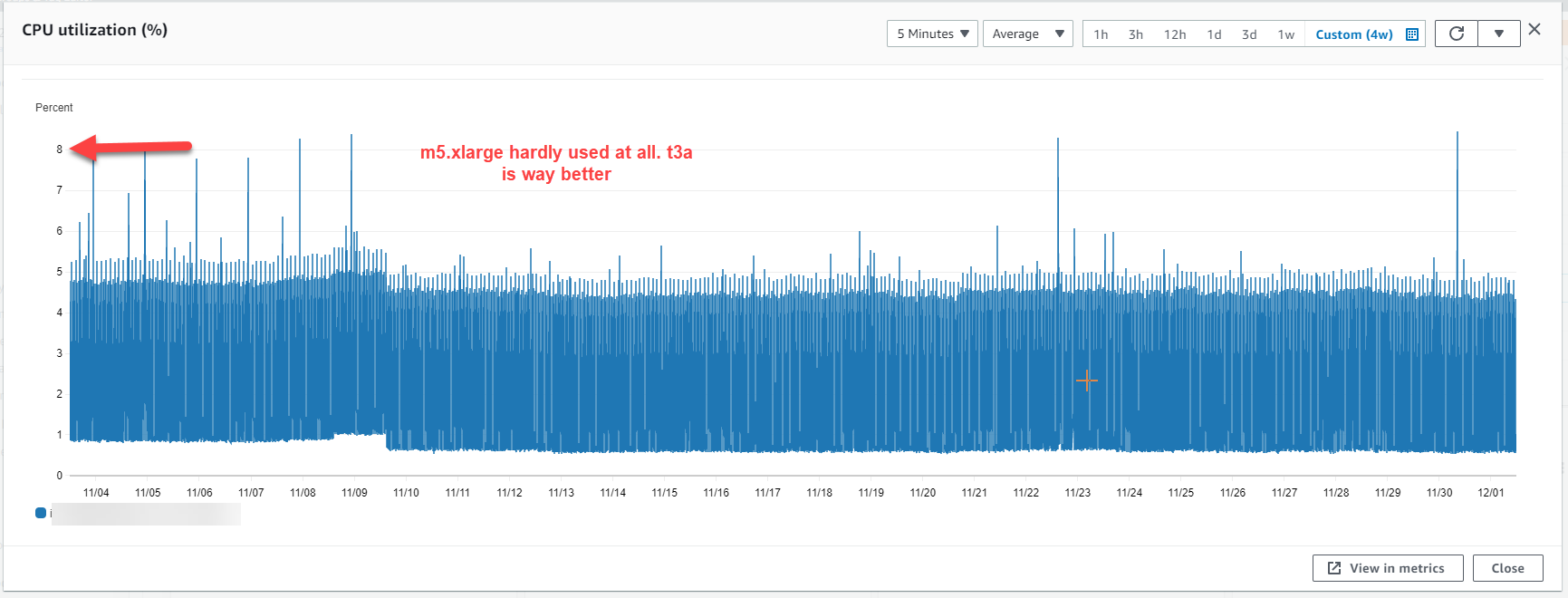
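For reference, the arithmetic behind those columns can be sketched in a few lines of Python. This assumes the old-cost-nzd and new-cost-nzd columns hold hourly on-demand prices and that a month is 730 hours. Both are my assumptions for the example rather than something the plugin dictates, and the prices in the usage line are made up.

```python
HOURS_PER_MONTH = 730  # Assumption: average hours in a month (8760 / 12)


def savings_row(old_cost_nzd, new_cost_nzd):
    """Work out the three savings columns from two hourly prices in NZD."""
    savings = old_cost_nzd - new_cost_nzd
    savings_month = savings * HOURS_PER_MONTH
    savings_year = savings_month * 12
    return {
        "savings-nzd": round(savings, 4),
        "savings-month-nzd": round(savings_month, 2),
        "savings-year-nzd": round(savings_year, 2),
    }


# Made-up hourly prices, purely to show the shape of the output
print(savings_row(old_cost_nzd=0.20, new_cost_nzd=0.10))
```

A negative savings value means the recommended type costs more than the current one, which is worth a second look before it goes in front of a customer.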

Now you can go and inspect each of the running EC2 instances and figure out if they're right-sized and right-typed. This customer didn't have any RAM metrics, so my recommendations were based on CPU utilisation only. This means that they're conservative, because there's a good chance that RAM isn't maxing out on all these instances.

There are some good AWS tools that can help with the analysis, like Trusted Advisor and Compute Optimizer. I didn't bother using these for this one, though, because this was pretty clear-cut.

Right-sizing

As a finalist in the doing-things-manually-that-should-be-automated awards, I went through each of the customer's EC2 instances and inspected the CPU utilisation over the last 4 weeks, and noted the usage pattern (spiky vs constantly utilised). Based on this info, I made a judgement call on what the instance would probably be better suited as.
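If clicking through CloudWatch graphs for every instance sounds painful, the same 4 weeks of CPU data can be pulled with boto3. This is a rough sketch rather than what I actually did. It assumes hourly datapoints are fine-grained enough, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def summarise_cpu(datapoints):
    """Return the overall mean and peak CPU % from CloudWatch datapoints, or None if empty."""
    if not datapoints:
        return None
    return {
        "mean": round(mean(point["Average"] for point in datapoints), 1),
        "peak": round(max(point["Maximum"] for point in datapoints), 1),
    }


def fetch_cpu_datapoints(instance_id, days=28):
    """Fetch hourly CPUUtilization datapoints for one instance over the last `days` days."""
    # Imported here so summarise_cpu can be tried without boto3 or AWS credentials
    import boto3

    end = datetime.now(timezone.utc)
    response = boto3.client("cloudwatch").get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # 28 days of hourly points stays under the per-call datapoint limit
        Statistics=["Average", "Maximum"],
    )
    return response["Datapoints"]
```

A big gap between the mean and the peak hints at a spiky workload, while two numbers close together suggest constant utilisation. The judgement call on what to resize to is still yours.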

For this particular customer, the total in the savings-year-nzd column came out to $34,995.32. Phew, that's decent as well!

Total savings so far: $76,577.33

Reserved Instances and Savings Plans

Once we've right-sized our EC2 instances, we can look at Reserved Instances and Savings Plans. You can really spend a heap of time on Reserved Instances and eke out every last dollar, but when I'm doing high-level and rapid analysis I just ball-park a number.

If the customer implemented my right-sizing and right-typing recommendations their spend would be roughly $95k / year on compute, so conservatively I would estimate 20% savings on that number by implementing some flavour of Reserved Instances or Savings Plans with a no up-front commit, so roughly $19,000.

Total savings: $95,577.33
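To show where that total comes from, here is the running tally as a few lines of Python, using the figures from this post. The variable names are just for illustration, and `Decimal` is used so the cents add up exactly.

```python
from decimal import Decimal

snapshot_cleanup = Decimal("41582.01")        # yearly saving from deleting old snapshots
right_sizing = Decimal("34995.32")            # yearly saving from right-sizing and right-typing
compute_after_right_sizing = Decimal("95000")  # roughly $95k / year on compute
commitment_discount = Decimal("0.20")          # conservative ball-park for RIs or Savings Plans

commitment_saving = compute_after_right_sizing * commitment_discount
total = snapshot_cleanup + right_sizing + commitment_saving

print(f"Reserved Instances / Savings Plans: ${commitment_saving:,.2f}")
print(f"Total savings: ${total:,.2f}")
```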

Summary

Working backwards from the AWS bill makes it easy to dial into the big-ticket items when it comes to optimising the cost of your AWS bill. And sometimes the savings are found where you least expect them!

Tooling is definitely better, but it's still possible to do some useful analysis using Python, Google Docs and elbow-grease.

This particular (hopefully happy) customer's total potential savings ended up coming to a whopping 40% of their AWS bill. Not bad!