Massively Parallel Machine Learning Inference Using AWS Lambda

Posted by Chris McKinnel - 20 April 2021 - 12 minute read

Is it even possible?

TL;DR: Oh, it's possible alright, and it is glorious!

I got introduced to CCL's Innovation Practice Lead, Josh Hobbs, sometime last year (thanks, Reg!) and he took me through some of the projects he had on the go. It's safe to say I had my little mind blown - he was doing some awesome stuff already and was planning some even more awesome stuff in the machine learning space.

He took me through the stuff his team had already done with a computer vision model they had trained, and explained that one of his team was spending an hour or two each Friday night getting his local machine set up so he could further train the model over the weekend using his single local GPU. The hardest bit of machine learning is getting a model trained well enough to do something useful for you (I don't know if that's true, but it seems true enough from what I've seen).

Actual footage of Chuan Law's computer, training models on a single GPU

Chuan Law is one of CCL's Software Engineers that specialises in Machine Learning and Computer Vision development.

The first thing I said to him was "We've gotta get this into the cloud!" and his response was "Yeah, we're planning to put it on Azure in docker containers". Hmm. He took me through some of the numbers for running the inference on the Azure ML services, and I started to realise that doing ML stuff was probably going to be pretty expensive.

It turns out the original iteration of the inference took a video as input, iterated through each frame, ran the model inference on each frame in order, and then stitched the frames back together into a nice output video with bounding boxes showing what it had found.

Pretty awesome!

I suggested at the time that we should be able to figure out a way to run the inference in parallel on the cloud, maybe even using something like Lambda.

I had no idea at the time whether or not this would be possible, and I didn't really even realise that we were using a GPU to do the inference because using the CPU was super slow (especially processing sequentially, maybe it wouldn't matter if it was slow if we could do it all in parallel?).

Anyway, I convinced Josh's team to give me access to the code under the guise of "I used to be a Python developer, I can do a code review for you!", but really I was already in the Matrix trying to figure out how we could run this bad boy across thousands of Lambdas at the same time.

I spent a little bit of time refactoring the code so I could split the video into frames initially, then run inference on a frame independently, and then put each of these functions into separate Lambdas on AWS.
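
To make that refactor a bit more concrete, here's a minimal sketch of the idea. It is not the project's code: `run_inference` is a hypothetical stand-in for the real model call, the frames are empty placeholders, and a thread pool plays the part of "one Lambda per frame".

```python
from concurrent.futures import ThreadPoolExecutor


def run_inference(frame):
    """Hypothetical stand-in for the real model call on one frame."""
    index, _pixels = frame
    return {"frame": index, "detections": []}


def process_sequentially(frames):
    # The original shape: one frame after another, in order.
    return [run_inference(frame) for frame in frames]


def process_in_parallel(frames, max_workers=8):
    # Each frame is independent, so the work can fan out. Here a thread
    # pool stands in for "one Lambda invocation per frame".
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order, so the output video
        # could still be stitched back together afterwards.
        return list(pool.map(run_inference, frames))


frames = [(i, b"") for i in range(43)]  # placeholder frames, not real pixels
assert process_in_parallel(frames) == process_sequentially(frames)
```

The point is only that once a frame can be processed without knowing about its neighbours, the order of execution stops mattering.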

Issue #1 - Python ML and video library size

Well, it turns out that OpenCV, Tensorflow and other libraries needed for computer vision and machine learning using Python are rather large. Altogether the libraries were like 3gb! Holy moly, we only get 250mb for a lambda (750mb if you're a cheat and use the /tmp directory at runtime to get another 512mb of usable disk space).

Not to mention the size of the model and the video itself. Hmm.
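
The arithmetic is simple enough, but here it is as a quick sketch using the limits quoted above (250mb plus 512mb comes to 762mb, which I rounded to 750). The function name is made up for illustration.

```python
# Sizes in MB, taken from the figures quoted in the post.
PACKAGE_LIMIT_MB = 250   # deployment package limit
TMP_LIMIT_MB = 512       # extra scratch space in /tmp at the time


def fits_in_lambda(dependency_mb, use_tmp=False):
    """Return (fits, budget_mb) for a given dependency size."""
    budget = PACKAGE_LIMIT_MB + (TMP_LIMIT_MB if use_tmp else 0)
    return dependency_mb <= budget, budget


libraries_mb = 3 * 1024  # "like 3gb" of Python libraries
print(fits_in_lambda(libraries_mb))                # (False, 250)
print(fits_in_lambda(libraries_mb, use_tmp=True))  # (False, 762)
```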

Luckily, I knew you could hook up EFS volumes to Lambdas which solved the disk space issue. So I fired up an EFS volume, copied the libraries, model, test video, etc, to the volume and hooked it up to my Lambdas.

Wahoo! The Lambda worked! I could chop a video up into frames, and I could run inference on each of the frames in a single Lambda. It was pretty slow, though. The libraries were taking over a minute to load, and then the inference was taking ages as well.

But that didn't matter (I thought), because we can just run thousands of these things at the same time which would bring the average processing time per frame down a heap.
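
A back-of-the-envelope sketch of that reasoning, assuming frames get processed in "waves" limited by how many Lambdas can run at once. The numbers are purely illustrative, not measurements from the project.

```python
import math


def wall_clock_seconds(frames, seconds_per_frame, concurrency):
    # Frames are processed in waves of at most `concurrency` at a time.
    waves = math.ceil(frames / concurrency)
    return waves * seconds_per_frame


def average_seconds_per_frame(frames, seconds_per_frame, concurrency):
    return wall_clock_seconds(frames, seconds_per_frame, concurrency) / frames


# Illustrative numbers only: 1,000 frames at 60 seconds each.
print(wall_clock_seconds(1000, 60, concurrency=1))     # 60000
print(wall_clock_seconds(1000, 60, concurrency=1000))  # 60
print(average_seconds_per_frame(1000, 60, 1000))       # 0.06
```

Each individual frame is still slow, but the time per frame averaged across the whole video drops with the concurrency.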

I let Josh know that I had solved all of his problems and we would be able to run parallel inference on Lambda for super cheap compared to Azure, and to go and sell this solution to everyone he could... I even did a demo to show him that I could process frames in parallel. Job done, mate!

Me selling Josh the dream

Meanwhile, I thought I'd better test this thing with a proper video. The one I was testing with was only a baby 43 frame video, so I threw a 65,000 frame one at it.

Hmm, no frames were appearing in S3 - so something had gone wrong.

Issue #2 - EFS is a bottleneck

Looking back, it's not surprising that this didn't work. But I was scratching my head at the time. Each lambda that was being fired up to execute inference on each frame had a heap of disk space, and it worked well enough on a smaller video - what was the bottleneck?

I had a quick look at EFS and then it hit me - EFS is effectively a network drive that gets deployed into your VPC, which means it gets an ENI attached to it. When we're executing a heap of Lambdas at the same time that are all trying to pull the Python libraries and videos from EFS, the network was getting smashed.

Sure enough when I had a look at the monitoring of the EFS volume it was red-lining. Damn, maybe I could provision some throughput so EFS would be able to handle a bit more traffic?

This idea lasted for as long as it took me to realise that this was going to be cost prohibitive. It cost $7.20 USD per month for each MB/s of provisioned throughput, and if we were going to be running this thing at scale then we would need a heap more than that (see: $7200 USD / month for 1GB/s).
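
Here's a rough sketch of that sum, using the $7.20 figure above and taking 1GB/s as 1000MB/s. The load in the second half (how many Lambdas, how much each one reads, how quickly) is entirely hypothetical and only there to show how fast the demand adds up.

```python
PRICE_PER_MBPS_MONTH_USD = 7.20  # figure quoted in the post


def required_throughput_mbps(lambdas, mb_each, seconds):
    """Aggregate read rate if every Lambda pulls mb_each within `seconds`."""
    return lambdas * mb_each / seconds


def provisioned_cost_usd(mb_per_second):
    """Monthly cost for a given amount of provisioned throughput."""
    return round(mb_per_second * PRICE_PER_MBPS_MONTH_USD, 2)


print(provisioned_cost_usd(1000))  # 7200.0, taking 1GB/s as 1000MB/s

# Hypothetical load: 1,000 Lambdas each reading 1,500MB within 60 seconds.
demand = required_throughput_mbps(1000, 1500, 60)
print(demand)                        # 25000.0
print(provisioned_cost_usd(demand))  # 180000.0
```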

OK, so can we reduce the load on EFS so it's not getting so smashed? Maybe we can figure out exactly what Python libraries we need and what ones we can live without, and squeeze them into every spare bit of space we get with Lambda?

I trawled through a heap of docs and blog posts, and stripped every Python library I could out of the Lambda and got the size down to... 1.5gb. Still too big. It was mainly Tensorflow that was taking up all the space - it was 1.2gb by itself!

Then I found a really old blog post where someone had managed to get Tensorflow running in a Lambda by using an old version (1.2) of it that was less than 500mb.

After much swearing I finally got a version running where the Lambda downloaded some Python libraries and model files to /tmp, and ran the test video without needing EFS. It took about 30 seconds to fully spin up, and then took about 40 seconds to do the inference on the largest Lambda at the time (3GB).
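
The download-to-/tmp trick looks roughly like the sketch below. This is not the code from the project: `ensure_cached` and `fake_fetch` are made-up names, the downloader is a placeholder (a real one might copy the file from S3), and a temporary directory stands in for /tmp so the example runs anywhere.

```python
import os
import tempfile


def ensure_cached(name, fetch, cache_dir):
    """Fetch `name` into cache_dir only if it is not already there."""
    path = os.path.join(cache_dir, name)
    if not os.path.exists(path):
        # Cold start: nothing cached yet, so pay the download cost once.
        fetch(name, path)
    return path


def fake_fetch(name, path):
    # Hypothetical downloader; a real one might copy the file from S3.
    with open(path, "wb") as handle:
        handle.write(b"placeholder for " + name.encode())


cache_dir = tempfile.mkdtemp()  # stands in for /tmp inside the Lambda
model_path = ensure_cached("model.pb", fake_fetch, cache_dir)
# A second call in the same (warm) container reuses the cached file.
assert ensure_cached("model.pb", fake_fetch, cache_dir) == model_path
```

The spin-up cost is only paid when a fresh container starts, which is why warm invocations are much quicker than cold ones.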

Issue #3 - Cost

I did some maths and based on the number of frames we needed to process per month for the customer, the price point for Lambda alone was around $55,000 USD / month.
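
For anyone wanting to do similar maths, here's a sketch of the general shape of the calculation. The two prices are assumptions (list prices as I understand them, so check the pricing page for your region), the free tier is ignored, and the workload is hypothetical. It is not the customer's real volume and it isn't meant to reproduce the figure above.

```python
PRICE_PER_GB_SECOND_USD = 0.0000166667   # assumed list price, check your region
PRICE_PER_MILLION_REQUESTS_USD = 0.20    # assumed list price


def monthly_lambda_cost(frames_per_month, seconds_per_frame, memory_gb):
    """Estimate monthly Lambda spend for one invocation per frame."""
    gb_seconds = frames_per_month * seconds_per_frame * memory_gb
    compute = gb_seconds * PRICE_PER_GB_SECOND_USD
    requests = frames_per_month / 1_000_000 * PRICE_PER_MILLION_REQUESTS_USD
    return compute + requests


# Hypothetical workload: 1 million frames a month, 40 seconds each on 3GB.
print(round(monthly_lambda_cost(1_000_000, 40, 3), 2))
```

The cost scales linearly with both the duration and the memory size, so slow inference on the biggest Lambda is the worst of both worlds.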

Sweet lord!

I also realised we couldn't use a Lambda to process the large 65,000 frame video, because chopping it up using OpenCV would just take too long and there wasn't a hope of having it done in the 15 minute Lambda timeout.

I sent Josh a tail-between-the-legs email with the subject "The Lambda dream is over". I had officially given up on processing our inference with Lambda. Boo.

It was about this time that Josh told me the project was going ahead in AWS, not Azure. He was confident that the AWS Practice would figure out how to make all this stuff work - especially since I had sold him the dream.

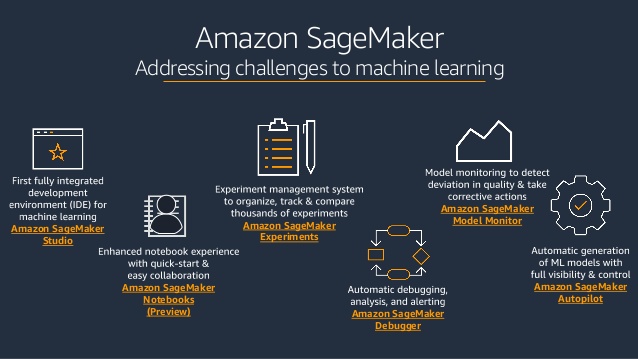

Throughout the process I had been in touch with a few people at AWS, and I had opened a few support cases to get advice. Everyone I talked to said I should be using SageMaker, not Lambda for my inference. So, that's where we headed!

Enter SageMaker!

We took the model we had trained (the royal "we", here - I didn't do any of the smart stuff) and put it in a Docker container in a format that SageMaker understood and got it deployed on AWS.

At this point the project was getting pretty serious so we brought a couple of new team members in to assist. I was no longer doing the doing, I was just staying involved to keep across things.

Issue #4 - GPU and concurrency

Bringing our own model to SageMaker and making it work with the GPU turned out to be a bit of a challenge. We would have issues when we attempted to get the instance to run multiple inferences at the same time (so more than one frame at a time), and when we tried to make the container use the GPU of the instance we would get a heap of what looked like GPU RAM related issues.

We had demoed the solution to a customer at this point, and we had resorted to just using the CPU of the SageMaker instance to do inference which was super slow.

Bleak times!

I reached out to a few people at AWS but it was pretty hard to pin down someone who had specific experience with these issues, and without deep diving with us they couldn't really offer assistance over email or quick video calls.

We started to realise that the issues were likely with the way we were building the container, and the libraries and drivers we were attempting to use.

We had a call with an SA in Aussie who had a bit of experience, and he steered us away from the Bring-Your-Own model use-case and toward training the model using SageMaker and using the out-of-the-box inference containers that SageMaker gives you. He basically said "let SageMaker do the work for you, and only use BYOM if it can't do what you need it to".

This turned out to be good advice, and we re-trained our model using some different algorithms and training data which solved our GPU and concurrency issues. Great! Although without scaling the SageMaker instances horizontally, we were still processing frames pretty slowly.

While scaling SageMaker horizontally is definitely possible, it gets pretty expensive, pretty quickly. I would have preferred to use Elastic Inference as well, but of course it's not available in the Sydney region yet.

We didn't end up going down this route, as once we got SageMaker actually doing inference successfully for us, we focused on the part of the problem where the video gets split into frames.

Because we had videos that are up to 65,000 frames long, how could we split them into frames in a scalable, fault tolerant and timely way?

Issue #5 - Video splitting

We must have gone through 5 different iterations for this part of the project. We went from using a split between ECS (Fargate) and Lambda, to fully Lambda, back to various combinations of ECS, DynamoDB, Step Functions and Lambdas.

It turns out it's hard to chop videos into frames and feed the frames into a processing step quickly, while at the same time being able to scale up or down and error handle individual frames, all at scale - up to 1 million frames a day. Especially when you're dealing with videos that are larger than the RAM and disk space of the Lambdas (although throughout the process of the project, the Lambda RAM limit was increased to 10GB, as well as the disk size - more on this later).

AWS probably has a service for that

I was running an unrelated Immersion Day in Christchurch and a couple of legends from AWS turned up to help us deliver it. One of them was an SA that just happened to have done some recent work with videos and splitting them into frames.

It turns out there is an AWS service called Elemental MediaConvert that did exactly what we were trying to do. The smart folk at AWS took care of all the heavy lifting for us - we could just give it a video and then it would magically get split into frames for us.

I don't know how I didn't find this service when I was Googling for it, but nevertheless we were stoked that it existed and we could scrap the Lambdas, Step Functions, Queues and everything else we were using to try and get a production-ready video processor.

We just configured the service to use GPU and we were processing our biggest videos in around 5 minutes!

Maybe the Lambda dream isn't over

While we were battling through the project and working around the issues above, as well as a heap more that I don't need to document here, the folk at AWS came to the party and added support for 10GB Lambda images in Sydney. They also increased the RAM limit of Lambdas to 10GB for good measure.

Wahooo! That means our storage space problem goes away!

Josh gave me a call one day and said "You'd better give Thomas a call". Thomas Claridge is our AWS Engineer on the project. "He's got some good news. Something about a Lambda and not needing SageMaker anymore".

With the latest feature releases in Sydney for Lambda, Thomas had managed to get a Lambda running the inference for the new model (which turned out to be way faster than the old model) in around 18 seconds per frame.

With Elemental MediaConvert processing the whole 65,000 frame video in around 5 minutes and saving all individual frames to S3, and our Lambdas being triggered by a CloudWatch Event for each of those frames, we were hitting the Lambda concurrency limit.
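
For a feel of what a per-frame Lambda looks like, here's a minimal handler sketch. One loud assumption: it reads the bucket and key from an S3 notification-style event, which is not the same shape as the CloudWatch Event mentioned above (that would carry the same details in different fields). The bucket name, key and function names are made up, and the inference step is left as a comment.

```python
import urllib.parse


def frame_locations(event):
    """Yield (bucket, key) for every object named in an S3-style event."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in S3 notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def handler(event, context):
    processed = []
    for bucket, key in frame_locations(event):
        # The real function would load the frame and run inference here.
        processed.append(f"s3://{bucket}/{key}")
    return {"processed": processed}


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-frames"},
                "object": {"key": "video-1/frame+00001.jpg"}}}
    ]
}
print(handler(sample_event, None))
# {'processed': ['s3://example-frames/video-1/frame 00001.jpg']}
```

Because every saved frame produces its own event, a video that lands in S3 quickly turns into a lot of simultaneous invocations.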

More power!

We increased the Lambda concurrency limit to 3000 executions, which meant we could process 3000 frames at the same time - as long as MediaConvert could spit them out quick enough. The bottleneck was actually how fast we could process the original video into frames, not the ML inference!

We (Thomas) managed to process the whole 65,000 frame video and perform inference on all frames in the video in a ridiculous 4 minutes and 12 seconds.

Once we told Josh the news, his comment to me was "Oh, you got out-AWS'd, son!".

Fair enough!

A better model. Faster, stronger.

Our data nerds didn't want to be outdone, so they rewrote the model and retrained it so inference only takes 1.5 seconds per frame now (whaaaaaat!?), and the other 1.5 seconds is doing boring stuff like talking to DynamoDB and saving images to S3.

Yep, you read that right, 3 seconds per frame. Waahoooooooooooo!

And, as an added bonus, we don't need the extra Lambda concurrency now because the model is so rapid.
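
A quick sanity check on that, as a sketch. It uses Little's law (work in flight = arrival rate x time per item) and assumes frames arrive evenly over the roughly 5 minutes MediaConvert takes, which is a simplification. It also assumes a default account concurrency limit of 1,000, which may differ for your account.

```python
def required_concurrency(frames, arrival_window_s, seconds_per_frame):
    # Little's law: items in flight = arrival rate x time each one takes.
    # Multiplying before dividing keeps the arithmetic exact here.
    return frames * seconds_per_frame / arrival_window_s


FRAMES = 65_000
WINDOW_S = 5 * 60  # MediaConvert takes around 5 minutes for the whole video

print(required_concurrency(FRAMES, WINDOW_S, 18))  # 3900.0
print(required_concurrency(FRAMES, WINDOW_S, 3))   # 650.0
```

At 18 seconds per frame the estimate lands well above a 1,000 limit, which fits with us hitting it. At 3 seconds per frame it drops to around 650 Lambdas in flight.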

Lessons learned

All projects, not just Machine Learning ones, are normally a process of iterating on ideas and implementations to get to the final state.

Just because you can do something in 2 hours because you know exactly what you're doing and have done it before, doesn't mean the solution is equal to 2 hours of billable time.

This solution is going to save one of our customers millions of dollars in various areas of their business - and if we were to build it again tomorrow, it would take 1/10th of the time it took us to get to where we are.

Don't give up!

If the dream isn't possible with the tech you've got at the moment, keep iterating and you might get to a point where you can rearchitect with new and unknown features and services to blast through what you thought was impossible.

Don't sell the dream?

I guess there's a lesson in here somewhere about not selling the dream before knowing it's possible... but I didn't learn it from this project. I'm a big fan of selling the dream and then throwing the problem over the fence to smart people to solve it! Just kidding (sort of).

Ask for help

AWS have some pretty smart people, and they're willing to help. In the past I probably would have gone into the tank and tried to solve the world's problems myself, but these days I reach out sooner rather than later to see if there is a quicker and easier solution than me architecting everything myself.

We're AWS consultants, and AWS want us to accelerate the adoption of high-value cloud services for our customers, so they'll come to the party and help us do that wherever they can.